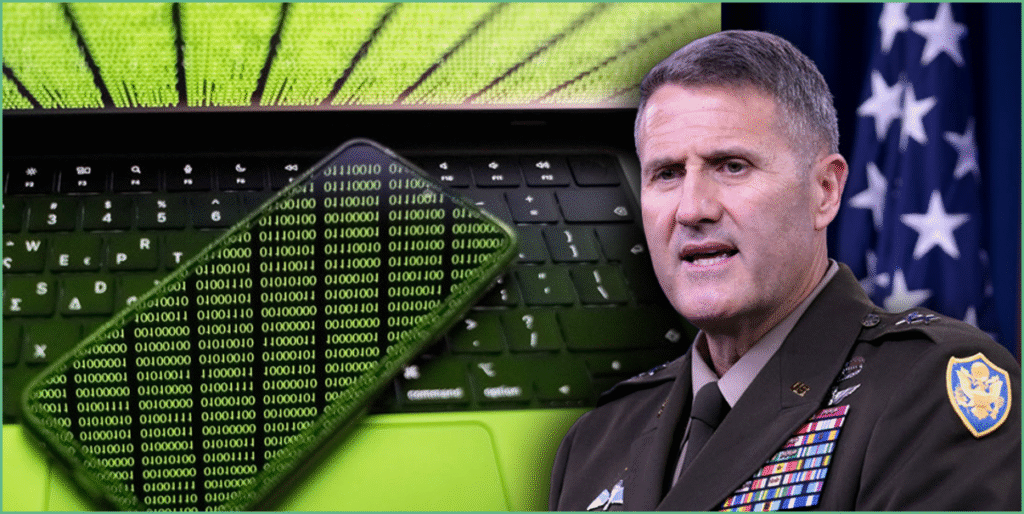

The Army has pushed back on worries that a general’s offhand comment meant machines would replace commanders, clarifying that its experiments with generative AI are about learning and safer modernization, not surrendering authority. Major General William “Hank” Taylor’s remarks sparked headlines after he mentioned using an AI chatbot to help build models, and the service moved quickly to explain how those experiments fit into broader efforts to improve timely decision-making. Separately, training with battle-ready offline tools shows the military is testing practical aids that deliver mission details to troops without handing over control.

At a public roundtable, Taylor said “Chat and I” have become “really close lately,” a turn of phrase that grabbed attention and raised questions about what leaders might be sharing with algorithms. Because Taylor commands thousands of troops and his decisions carry real consequences, reporters pressed for specifics rather than let the comment stand vague. The Army’s follow-up described the remark as part of “ongoing modernization efforts” focused on helping leaders make informed, timely choices rather than automating command.

The service spelled out that “All operational and personnel decisions remain the sole responsibility of commanders and their staff, guided by Army policy, regulation, and professional judgment,” reaffirming that leaders will stay in charge. Officials stressed they are looking for “trusted, secure, and compliant systems that enhance — not replace — human decision-making.” That language is meant to reassure rank-and-file soldiers and the public that lines of authority and accountability remain intact even as the tools evolve.

Officials also said Taylor has been experimenting broadly to understand generative AI, using publicly available tools and HQDA-approved large language models to see how secure, compliant systems might help with leadership development or efficiency. The Army said Taylor does not endorse any particular commercial platform, and the service declined to confirm whether he was referring to ChatGPT specifically during the exchange with reporters. The message from leadership is simple: learn the technology, test the safeguards, and do not let flashy demos outpace policy.

Meanwhile, the Pentagon is testing practical, offline-capable chatbots for troops in the field, a program designed to deliver data instantly without continuous internet access. EdgeRunner AI was used in training at Fort Carson and Fort Riley to give soldiers mission objectives, coordinates, and other critical details in austere environments. Those experiments are aimed at tactical usefulness and resilience on the ground, not at turning algorithms into commanders.

The Army explicitly stated it is not considering “delegating command authority to an algorithm or chatbot,” and that position aligns with a conservative view that human judgment should remain paramount in matters of life and death. From a Republican perspective, that restraint is necessary because military command requires moral and legal responsibility that machines cannot assume. We should be open to tools that boost readiness and protect troops, but skeptical of any move that would dilute civilian control or commander accountability.

“MG Taylor’s engagement with HQDA-approved AI platforms reflects a forward-thinking approach to leadership and modernization,” an Army representative concluded. “By responsibly experimenting with these emerging tools, he is helping the Army explore how artificial intelligence can strengthen decision-making, improve efficiency, and prepare leaders for the evolving demands of the modern battlefield.”

Reader comment:

This appears to be “much ado about nothing.” Even back in the ’80s, when we had second-generation fire control equipment, we told the computer what the engagement criteria were and, when they were met, to send an alert to the decision maker for review and a “shoot or no shoot” decision. AI is certainly more sophisticated and faster, but it doesn’t change that basic relationship. I’m glad senior leaders are fooling around with it so they can learn how it can improve both the quality and the speed of their decisions.